This article explains how to get code coverage from an ASP.NET website, web application, web service, standalone executable, etc.

Table of contents

- Introduction

- Code coverage steps

  - Compiling the website

  - Instrumenting all DLLs or executables

  - Starting vsperfcmd

  - Running the application

  - Stopping the application

  - Stopping vsperfcmd

  - Converting the code coverage file to XML

  - Using ReportGenerator to get an HTML report

- Automating the code coverage process via batch script

- Conclusion

- History

Introduction

Here is the definition of code coverage (courtesy of Wikipedia):

In computer science, code coverage is a measure used to describe the degree to which the source code of a program is executed when a particular test suite runs. A program with high code coverage, measured as a percentage, has had more of its source code executed during testing, which suggests it has a lower chance of containing undetected software bugs compared to a program with low code coverage. Many different metrics can be used to calculate code coverage; some of the most basic are the percent of program subroutines and the percent of program statements called during execution of the test suite.

In my earlier project, we wrote unit test cases and measured code coverage from them. For a legacy project, however, writing unit tests was hard because it required refactoring the code, which is time-consuming. So we set up a process that gives us code coverage from manual or automated testing of the website.

Here is a detailed explanation of the steps.

Code coverage steps

Here, I will explain how to get code coverage for an ASP.NET website and a standalone executable. I have chosen an ASP.NET website instead of a web application because:

- our earlier project was a website, and we had not migrated it to a web application.

- a web application already produces DLLs when it is built, so getting its coverage is easier (it skips the website compilation step of the process).

An ASP.NET website contains only .aspx and .aspx.cs (or .aspx.vb) files along with a .config file. If it has any project references, it will also have a bin folder containing their DLLs.

To make this concrete, I use a simple calculator example, available both as a website and as a standalone executable. It takes 3 inputs (an operator and 2 operands) and calculates the result.

The solution has 3 projects.

- “CalcDLL”, a library project that contains the logic for calculating the value from the inputs. Its constructor takes the 2 operands, and its one public function, “Calculate”, takes the operator and returns the result.

- A website (“CalculateWebsite”) with 2 text boxes and a drop-down list. It takes input from the user and calculates the value. It has a project reference to CalcDLL and a publishing profile that simply copies the files to a separate location, here “C:\demo\”.

- A console project (“CalcConsole”) that takes 3 command-line arguments. For example, “CalcConsole.exe + 1 1” prints Result = 2.

Note:

- These projects could also be tested with unit tests. I have skipped that because this article demonstrates code coverage from automated or manual testing of the app (a kind of functional testing).

- I have added the folder of every required tool to my PATH environment variable, which is why I can run those commands directly.

Preliminary steps for getting DLLs and EXEs

Website

For the website, we first need to publish it to a local directory. Publishing does not copy the “.pdb” files of referenced projects, so we need to copy that file manually from the website project's bin folder (\CalculateWebsite\bin\Calc.pdb) to the published directory (C:\demo\bin).

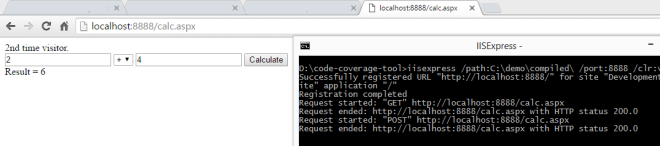

Here is the screenshot of the website.
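Because copying the PDB is the one manual step in this process, it can be scripted. Here is a minimal Python sketch, assuming you pass in the website project's bin folder and the published bin folder; `copy_missing_pdbs` is a hypothetical helper, not part of any Visual Studio tool. It copies a .pdb only when the matching .dll was published and the .pdb is still missing.

```python
import shutil
from pathlib import Path


def copy_missing_pdbs(source_bin: str, published_bin: str) -> list[str]:
    """Copy .pdb files from the project's bin folder to the published bin
    folder for every published .dll whose .pdb was left behind."""
    src = Path(source_bin)
    dst = Path(published_bin)
    copied = []
    for pdb in sorted(src.glob("*.pdb")):
        dll = dst / (pdb.stem + ".dll")
        target = dst / pdb.name
        # Only copy symbols for assemblies that were actually published.
        if dll.exists() and not target.exists():
            shutil.copy2(pdb, target)
            copied.append(pdb.name)
    return copied
```

For the calculator example, you would call it with the CalculateWebsite project's bin folder and C:\demo\bin before running aspnet_compiler.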

Compiling the website:

A website has only “.aspx.cs” and “.aspx” files, and instrumentation works only on DLLs or EXEs, so we need either to convert the website to a web application or to precompile it. Here is the command to precompile it:

```bat
aspnet_compiler -p %original_dir% -v / %compiled_dir% -d
```

- -p: physical path of the source website

- -v: virtual path of the application

- -d: emit debug information (a debug build).

Here is the screenshot of the website after compiling.

This command generates a DLL with a name similar to App_Web_m55qrtmf.dll. The compiled output keeps only the “.aspx” and config files, because the .aspx.cs code has been compiled into that DLL.

Here is the screenshot.
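The App_Web_ suffix (m55qrtmf here) is generated by the compiler and can change when the site is recompiled, so a script should look the name up rather than hard-code it. A small Python sketch; the helper name `find_app_web_dlls` is hypothetical:

```python
from pathlib import Path


def find_app_web_dlls(compiled_bin: str) -> list[str]:
    """Return the names of the App_Web_*.dll files that aspnet_compiler
    wrote to the compiled site's bin folder, sorted for stable output."""
    return sorted(p.name for p in Path(compiled_bin).glob("App_Web_*.dll"))
```

For this example, `find_app_web_dlls(r"C:\demo\compiled\bin")` would list the DLL that must be passed to vsinstr in the next step.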

For the console project

For the console project, we only need to build it in Debug mode.

Note: For code coverage, we always need a Debug build of the project, because instrumentation needs both the DLL (or EXE) and its PDB file.

Instrumenting DLLs:

Here is the command to instrument an assembly:

```bat
vsinstr /coverage <assembly.dll or program.exe>
```

vsinstr.exe is located in “C:\Program Files (x86)\Microsoft Visual Studio 12.0\Team Tools\Performance Tools”. You can add this folder to your system PATH variable to run the command directly. “vsinstr” stands for Visual Studio Instrumentation tool.

That path is for VS 2013 (version 12.0); for other versions, try replacing 12.0 with your version number.

A website or application may have multiple DLLs, and each of them must be instrumented.

For the console project, these are the exact commands:

```bat
vsinstr /coverage Calc.dll
vsinstr /coverage CalcConsole.exe
```

For the website, run the following commands:

```bat
vsinstr /coverage C:\demo\compiled\bin\Calc.dll
vsinstr /coverage C:\demo\compiled\bin\App_Web_m55qrtmf.dll
```

Here is the screenshot of the vsinstr output.
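Listing every DLL by hand gets tedious as the bin folder grows. Here is a minimal Python sketch that builds one `vsinstr /coverage` command per DLL or EXE that has a matching .pdb beside it, since instrumentation needs both. The helper name `vsinstr_commands` is hypothetical, and skipping assemblies without symbols (for example third-party DLLs) is my assumption about what you want measured.

```python
from pathlib import Path


def vsinstr_commands(bin_dir: str) -> list[list[str]]:
    """Return one ["vsinstr", "/coverage", <file>] command per DLL/EXE in
    bin_dir that has a .pdb with the same base name next to it."""
    commands = []
    for path in sorted(Path(bin_dir).iterdir()):
        if path.suffix.lower() not in (".dll", ".exe"):
            continue  # skips .pdb, .config and any .orig backups
        if not path.with_suffix(".pdb").exists():
            continue  # no symbols, so it cannot be instrumented for coverage
        commands.append(["vsinstr", "/coverage", str(path)])
    return commands
```

Each returned command can then be passed to `subprocess.run(cmd, check=True)`.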

vsinstr inserts probes into functions, conditional blocks, etc., which is why the DLL and EXE sizes increase. It keeps a backup of each original file with an “.orig” extension.

Here is the screenshot of the files after running vsinstr. Compared with the previous screenshot, the DLL and EXE sizes have grown.
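The .orig backups also make it possible to start over. As far as I know, vsinstr is not meant to be run on a binary it has already instrumented, so before repeating the process on the same folder you can copy the backups back. A minimal Python sketch, assuming each backup is named after the original file plus “.orig” (for example Calc.dll.orig); `restore_originals` is a hypothetical helper:

```python
import shutil
from pathlib import Path


def restore_originals(bin_dir: str) -> list[str]:
    """Copy every <name>.orig backup back over <name>, so the folder holds
    un-instrumented binaries again. Backups are kept, not deleted."""
    restored = []
    for backup in sorted(Path(bin_dir).glob("*.orig")):
        original = backup.with_suffix("")  # Calc.dll.orig -> Calc.dll
        shutil.copy2(backup, original)
        restored.append(original.name)
    return restored
```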

Start vsperfcmd:

Next, we start vsperfcmd. “vsperfcmd” stands for Visual Studio Performance command-line tool. It is located in the same folder as vsinstr, so adding that folder to the PATH environment variable makes both easy to run. Here is the command:

```bat
vsperfcmd /start:coverage /output:C:\demo\compiled\demo.coverage
```

- /start:coverage: start the profiler in coverage mode.

- /output: location of the .coverage file.

Here is the screenshot of the output.

Running the application:

For the console project, you can test directly from the command line. For example, to test “+” and “-”, use the following commands:

```bat
CalcConsole.exe + 2 5
CalcConsole.exe - 2 5
```

Here “CalcConsole.exe” is the instrumented build output of the CalcConsole project. Launch it from “\CalcConsole\bin\debug\CalcConsole.exe”.
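When testing several operators, a small script keeps the runs repeatable. A minimal Python sketch, meant to run while vsperfcmd is collecting; `run_console_cases` is a hypothetical helper, and `run` defaults to `subprocess.run` but can be swapped out to try it without the real EXE.

```python
import subprocess


def run_console_cases(exe_path, cases, run=subprocess.run):
    """Run the instrumented console app once per (operator, a, b) case and
    return each run's trimmed standard output."""
    outputs = []
    for op, a, b in cases:
        result = run([exe_path, op, str(a), str(b)],
                     capture_output=True, text=True, check=True)
        outputs.append(result.stdout.strip())
    return outputs
```

For the two commands above, pass the path of the instrumented CalcConsole.exe and `[("+", 2, 5), ("-", 2, 5)]`.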

For the website project, start IIS Express, open the website in a browser, and test it manually. I have added the IIS Express folder (C:\Program Files\IIS Express\) to my PATH environment variable. Here is the command to run IIS Express:

```bat
iisexpress /path:C:\demo\compiled\ /port:8888 /clr:v4.0
```

- /path: physical path to the website

- /port: port to listen on

- /clr: CLR version to use

Here is the output of the command. To stop IIS Express, press “q”.

As you can see, I have tested only the “+” operator; there is only one POST request.
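If you drive the website from a script, IIS Express can be launched the same way. A minimal Python sketch that passes the switches described above; `start_iisexpress` is a hypothetical helper, and `popen` defaults to `subprocess.Popen` but is injectable so the command line can be checked without starting a server.

```python
import subprocess


def start_iisexpress(site_path, port=8888, clr="v4.0", popen=subprocess.Popen):
    """Launch IIS Express on the precompiled site with the /path, /port and
    /clr switches, and return whatever popen returns (the process handle)."""
    cmd = ["iisexpress", f"/path:{site_path}", f"/port:{port}", f"/clr:{clr}"]
    return popen(cmd)
```

Stopping the server cleanly with “q” is still the way to end the test run, so that vsperfcmd can collect the data afterwards.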

Stop the application:

For the website, press “q” to stop the web server.

For the console project, just stop testing 🙂.

Stop vsperfcmd:

Now, we need to stop vsperfcmd. Here is the command to do that.

```bat
vsperfcmd /shutdown
```

This collects the coverage data from the test run and writes the “demo.coverage” file, the name we specified when starting the coverage process.

Here is the screenshot for this.
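The start and shutdown commands have to bracket the whole test run, and the .coverage file is only written on shutdown. Here is a minimal Python sketch that wraps both in a context manager so /shutdown runs even if a test fails; `coverage_session` is a hypothetical name, and `run` defaults to `subprocess.run` but can be replaced to try the sketch without the Performance Tools installed.

```python
import subprocess
from contextlib import contextmanager


@contextmanager
def coverage_session(output_file, run=subprocess.run):
    """Start vsperfcmd in coverage mode, yield while tests run, and always
    call /shutdown afterwards, which writes the .coverage file."""
    run(["vsperfcmd", "/start:coverage", f"/output:{output_file}"], check=True)
    try:
        yield
    finally:
        run(["vsperfcmd", "/shutdown"], check=True)
```

Usage: run your tests inside `with coverage_session(r"C:\demo\compiled\demo.coverage"):`.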

Convert the code coverage file to XML:

This coverage file can be opened in the Ultimate or Premium edition of Visual Studio. Here is the screenshot for this.

The .coverage file is binary, so it needs to be converted to a readable format before we can produce a nicely formatted HTML report. If you have one of those Visual Studio editions, you can skip the code below by opening the file there and exporting it to XML.

Alternatively, you can use the following code snippet to convert it to an XML file:

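```csharp
using System.Data;
using System.IO;
using Microsoft.VisualStudio.Coverage.Analysis;

class Program
{
    // args[0] - input path of the demo.coverage file
    // args[1] - output directory for the converted coverage.xml file
    static void Main(string[] args)
    {
        string xmlPath = Path.Combine(args[1], "coverage.xml");
        using (CoverageInfo coverage = CoverageInfo.CreateFromFile(args[0]))
        using (DataSet data = coverage.BuildDataSet(null))
        {
            data.WriteXml(xmlPath);
        }
    }
}
```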

This code needs a reference to “Microsoft.VisualStudio.Coverage.Analysis.dll”, which is part of Visual Studio*. The dependent DLLs are located in “C:\Program Files (x86)\Microsoft Visual Studio 12.0\Common7\IDE\PrivateAssemblies”.

* Again, these are part of the Visual Studio editions stated above.

I have written a simple utility based on the code above that converts the file to XML. Here is the command to run it:

```bat
coveragereport C:\demo\compiled\demo.coverage C:\demo\compiled\
```

This creates the “coverage.xml” file in that directory.
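To check the numbers before generating the HTML report, you can read coverage.xml directly. Here is a minimal Python sketch that computes block coverage per module as covered / (covered + not covered) × 100. The element names (Module, ModuleName, BlocksCovered, BlocksNotCovered) are my assumption about what DataSet.WriteXml produces for this data set, so check them against your own coverage.xml. `module_block_coverage` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def _local_name(tag):
    # Drop a "{namespace}" prefix in case the file declares an XML namespace.
    return tag.rsplit("}", 1)[-1]


def module_block_coverage(xml_text):
    """Return {module name: block coverage %} for each top-level Module
    element (assumed layout; see the lead-in)."""
    root = ET.fromstring(xml_text)
    result = {}
    for module in root:
        if _local_name(module.tag) != "Module":
            continue
        fields = {_local_name(child.tag): (child.text or "") for child in module}
        covered = int(fields.get("BlocksCovered") or 0)
        not_covered = int(fields.get("BlocksNotCovered") or 0)
        total = covered + not_covered
        # block coverage % = covered / (covered + not covered) * 100
        result[fields.get("ModuleName", "")] = (
            round(100.0 * covered / total, 2) if total else 0.0
        )
    return result
```

Read the file with `Path(r"C:\demo\compiled\coverage.xml").read_text(encoding="utf-8-sig")`, which drops a leading byte-order mark if there is one, and pass the text in.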

HTML report generator:

For this, you need the open-source tool “ReportGenerator”, which converts the XML report into a nicely formatted HTML report. Here is the command to run:

```bat
reportgenerator -reports:C:\demo\compiled\coverage.xml -targetdir:C:\demo\compiled\report
```

Here is the screenshot for this.

ReportGenerator creates the HTML report files in “C:\demo\compiled\report”. You can view the report by opening index.htm.

In it, you can see that “SumEvaluator” has 100% coverage.

Here is the screenshot of html file.

Automating the code coverage process via batch script:

To automate this process, I have created a batch file, “start-coverage.bat”, which takes one input: the path of the website. It runs the steps above on that website and produces the coverage report. You just have to execute the batch file like this:

```bat
start-coverage.bat C:\demo
```

Assumption: the folders of “vsinstr”, “vsperfcmd”, the coverage-to-XML tool (“coverage_to_xml_tool”) and “reportgenerator” have been added to the PATH environment variable of the machine running the script.
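The batch file itself is not reproduced here. As a rough illustration of the same flow, here is a hypothetical Python counterpart, not the start-coverage.bat itself: it runs the commands from the steps above in order, instruments only DLLs that have a matching .pdb, and always shuts vsperfcmd down. It assumes this article's folder layout (compiled output in a “compiled” subfolder of the site), the same PATH setup, and the converter utility named coveragereport; with `execute=False` it only returns the command list.

```python
import os
import subprocess
from pathlib import Path


def coverage_pipeline(site_dir, run_tests, execute=True):
    """Hypothetical Python version of the coverage flow (a sketch).

    site_dir  -- published website folder, e.g. C:\\demo
    run_tests -- callable that exercises the site while coverage is collected
    execute   -- when False, nothing runs; the commands are only returned
    """
    site = Path(site_dir)
    compiled = site / "compiled"
    bin_dir = compiled / "bin"
    coverage_file = compiled / "demo.coverage"
    commands = []

    def step(*cmd):
        commands.append(list(cmd))
        if execute:
            subprocess.run(list(cmd), check=True)

    # 1. Precompile the website into DLLs with debug information.
    step("aspnet_compiler", "-p", str(site), "-v", "/", str(compiled), "-d")
    # 2. Instrument every DLL that has symbols next to it.
    for dll in sorted(bin_dir.glob("*.dll")):
        if dll.with_suffix(".pdb").exists():
            step("vsinstr", "/coverage", str(dll))
    # 3-5. Collect coverage while the tests run; always shut the monitor down.
    step("vsperfcmd", "/start:coverage", f"/output:{coverage_file}")
    try:
        if execute:
            run_tests()
    finally:
        step("vsperfcmd", "/shutdown")
    # 6. Binary .coverage -> coverage.xml with the converter utility.
    step("coveragereport", str(coverage_file), str(compiled) + os.sep)
    # 7. coverage.xml -> HTML report.
    step("reportgenerator", f"-reports:{compiled / 'coverage.xml'}",
         f"-targetdir:{compiled / 'report'}")
    return commands
```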

Conclusion

This process simplifies getting a coverage report from functional testing, whether manual or automated, and the batch file makes it easier still. The only manual step is copying the PDB file to the published location.

Here is the link to the code. It contains the source code for the 3 projects plus 1 more project (the tool that converts the coverage file to XML).

History

I tried many helpful suggestions from Microsoft MSDN articles before arriving at this approach. Here are a few of them:

- deploying the website to an IIS server and using “vsperfclrenv /globaltraceon” to collect coverage.

- attaching w3wp to vsperfmon.

- using vsperfreport to get a .vsp file and then converting it.

Unfortunately, none of them worked for me. That encouraged me to write this blog to help others who are looking for a solution.

References

- https://blogs.msdn.microsoft.com/graycode/2005/05/10/off-road-profiling-of-asp-net-applications/

- https://msdn.microsoft.com/en-us/library/dd299398(v=vs.90).aspx

- https://blogs.msdn.microsoft.com/phuene/2009/12/01/programmatic-coverage-analysis-in-visual-studio-2010/

- https://msdn.microsoft.com/en-us/library/aa985627.aspx
